Unpacking Buzzwords

"Lakehouses", "Lakebases", "Meshes" and "Medallion Architectures": whatever the buzzword, it's essential to understand the underlying methodology, because they all follow the same foundational Data Lake approach. Yet the core business question often remains unanswered or is simply assumed. Before committing to a data journey that typically spans 3–5 years and costs in excess of $25 million (an approach frequently promoted by industry quadrants, which shape strategic architecture decisions), it may be worth considering the following points:

Replication

Most data solutions today are fundamentally flawed because they rely on rigid, complex data pipelines that replicate or copy data from source systems into a central data lake or warehouse. While this may have made sense a decade ago, in today’s AI-driven landscape, it introduces major inefficiencies. These pipelines create an additional layer of infrastructure that is not only expensive to build and maintain, but also scales poorly as data volumes — and AI workloads — continue to grow.

The world is generating unprecedented volumes of data, across every function and system, yet the traditional approach treats all data equally: copy it, store it, and hope it becomes useful later. The result? Huge costs, significant latency, and teams stuck managing infrastructure instead of driving insights. In the age of AI, we need a fundamentally different approach.

Bottom line: Are we confident this is still the right approach? Should we be focusing more on business-relevant data, rather than potentially including unnecessary noise?

Catalogues

Cataloguing data that has simply been replicated from source systems requires specialist knowledge. Most business users interact with the system through the user interface and aren’t familiar with the underlying database fields or their purposes. Proper data governance, however, demands classification down to the field level. Because this is often seen as impractical, teams tend to default to table-level classification instead. True field-level classification requires a clear understanding of:

- The type of data (master, reference, or transactional)

- Whether the data is subject to privacy regulations

- The sensitivity of the data within the organisation

- Any applicable legal or regulatory requirements (e.g. SOCI compliance)
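
To make these four criteria concrete, here is a minimal Python sketch of a field-level catalogue record. It assumes a hypothetical four-level sensitivity scale and made-up vendor fields; none of these names come from a real catalogue product or source system. It also hints at why table-level classification is blunt: rolling the labels up to the most sensitive field locks the whole table behind its bank details, whereas field-level labels keep the harmless fields open.

```python
from dataclasses import dataclass
from enum import Enum, IntEnum


class DataType(Enum):
    MASTER = "master"
    REFERENCE = "reference"
    TRANSACTIONAL = "transactional"


class Sensitivity(IntEnum):
    # Hypothetical four-level scale, ordered so max() returns the most sensitive level.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass(frozen=True)
class FieldClassification:
    table: str
    field_name: str
    data_type: DataType            # master, reference or transactional
    privacy_regulated: bool        # subject to privacy regulations?
    sensitivity: Sensitivity       # sensitivity within the organisation
    regulations: frozenset[str] = frozenset()  # e.g. {"SOCI"}


def table_level_view(fields: list[FieldClassification]) -> Sensitivity:
    """Collapse field-level labels into one table label: the most sensitive field wins."""
    return max(f.sensitivity for f in fields)


# Hypothetical vendor table, classified field by field.
vendor_fields = [
    FieldClassification("vendor", "vendor_id", DataType.MASTER, False, Sensitivity.INTERNAL),
    FieldClassification("vendor", "vendor_name", DataType.MASTER, False, Sensitivity.PUBLIC),
    FieldClassification("vendor", "bank_account", DataType.MASTER, True, Sensitivity.RESTRICTED),
    FieldClassification("vendor", "site_access_level", DataType.MASTER, False,
                        Sensitivity.CONFIDENTIAL, frozenset({"SOCI"})),
    FieldClassification("vendor", "country_code", DataType.REFERENCE, False, Sensitivity.PUBLIC),
]

if __name__ == "__main__":
    # Table-level: the whole table inherits the strictest label.
    print(table_level_view(vendor_fields).name)  # RESTRICTED
    # Field-level: the low-sensitivity fields can still be shared widely.
    print([f.field_name for f in vendor_fields if f.sensitivity <= Sensitivity.INTERNAL])
    # ['vendor_id', 'vendor_name', 'country_code']
```

Even in this toy example, someone has to know what `site_access_level` means and why it falls under SOCI, which is exactly the specialist knowledge that business users working through the user interface rarely have.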

Despite this, the industry focus has shifted toward identifying so-called “critical” data. In reality, around 80% of the data is irrelevant and kept only for “just in case” scenarios. This often leads business users to question the value of the effort, especially when more immediate concerns are competing for attention. Without clearly defined data ownership, securing business engagement and buy-in becomes a significant challenge.

Bottom line: Perhaps we should consider whether it’s reasonable to expect business domains to catalogue data they may never have encountered before. This might be a knock-on effect of the broader Data Lake approach, and could be worth re-evaluating.

Products

Building data products involves identifying the data that’s truly relevant to the business and transforming it into curated datasets — essentially modelled, tabular views — that can be shared and reused across business units. This process requires domain-specific data engineering and modelling expertise, which is increasingly scarce and expensive. Examples of such modelled data products include:

- Invoice data product from Finance – SAP

- Work Order data product from Finance – SAP

- Purchase Order data product from Finance – SAP

- Vendor Contract data product from Procurement – SAP

- Work Task data product from IT – JIRA

- Customer Survey data product from Customer team – Website

- Customer Activity data product from Customer team – CRM

- Asset Maintenance Plan data product from Asset team – SAP

- Asset Inspection data product from Operations – Inspection App

- Asset IoT data product from Asset team – Sensor Apps

Bottom line: Data products, such as business objects from applications, are ultimately an interpretation of what we believe the customer needs. They are often shaped by assumptions and, however well-informed, may still represent only our best guess.

Layers

Controlling the duplication of master data across business domains becomes nearly impossible with the democratisation of data. As each domain takes ownership of its own data, multiple systems often end up holding versions of the same master data—raising questions about which source is truly authoritative. This challenge is further complicated by the common practice of replicating source data into a data lake.

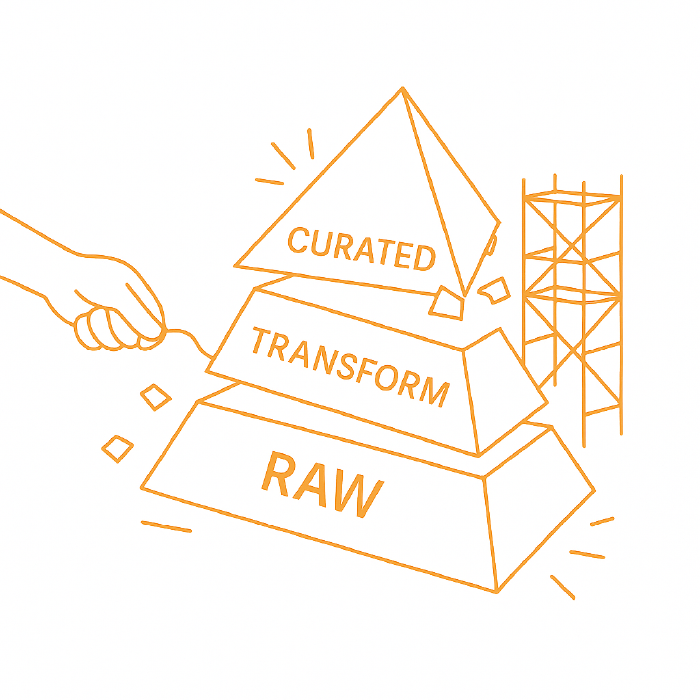

Typically, the Medallion Architecture consists of three layers (often labelled Bronze, Silver and Gold):

- Raw Layer – a direct copy of the source data

- Transform Layer – where basic cleansing and minor transformations occur

- Curated Layer – where modelled, business-ready data (final data products) is made available to users
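
The flow through these three layers can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular platform implements it: the invoice rows, column names and cleansing rules are hypothetical, and plain lists of dictionaries stand in for the tables a lakehouse would persist at each stage.

```python
from collections import defaultdict
from datetime import date

# Hypothetical source extract: rows exactly as the source system returns them.
SOURCE_ROWS = [
    {"INV_NO": "1001", "VENDOR": " acme ", "AMOUNT": "250.00", "INV_DATE": "2024-03-01"},
    {"INV_NO": "1001", "VENDOR": " acme ", "AMOUNT": "250.00", "INV_DATE": "2024-03-01"},  # duplicate
    {"INV_NO": "1002", "VENDOR": "Globex", "AMOUNT": "99.50", "INV_DATE": "2024-03-02"},
    {"INV_NO": "1003", "VENDOR": "ACME", "AMOUNT": "", "INV_DATE": "2024-03-05"},  # missing amount
]


def raw_layer(rows):
    """Raw: a direct, untouched copy of the source data."""
    return [dict(r) for r in rows]


def transform_layer(raw_rows):
    """Transform: basic cleansing (de-duplicate, trim, cast types, drop incomplete rows)."""
    seen, clean = set(), []
    for r in raw_rows:
        if r["INV_NO"] in seen or not r["AMOUNT"]:
            continue
        seen.add(r["INV_NO"])
        clean.append({
            "invoice_no": r["INV_NO"],
            "vendor": r["VENDOR"].strip().title(),
            "amount": float(r["AMOUNT"]),
            "invoice_date": date.fromisoformat(r["INV_DATE"]),
        })
    return clean


def curated_layer(clean_rows):
    """Curated: a modelled, business-ready view (here, invoice totals per vendor)."""
    totals = defaultdict(float)
    for r in clean_rows:
        totals[r["vendor"]] += r["amount"]
    return dict(totals)


if __name__ == "__main__":
    print(curated_layer(transform_layer(raw_layer(SOURCE_ROWS))))
    # {'Acme': 250.0, 'Globex': 99.5}
```

In a typical lakehouse each stage would be persisted as its own set of tables, so the same invoices end up stored three times in three shapes before anyone in the business uses them.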

Bottom line: Is it worth reconsidering whether we truly need three layers, especially if a single, well-designed layer could meet our needs? Traditional approaches may not always align with the agility required in today’s AI-driven landscape.

Usage

Using these data products assumes the business user will consult the data catalogue to understand each product’s contents and decide which are relevant. This requires a degree of technical proficiency—to connect to datasets, extract and combine the data, and integrate it with other products they deem useful. The user must also be able to prepare and link the data to carry out meaningful analysis. In the end, this still results in descriptive, “what happened” analytics, limited by the predefined structure and scope of the available data products.
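
To illustrate the kind of stitching this leaves to the business user, here is a minimal Python sketch that combines two of the example data products, Invoice and Vendor Contract. The field names, the shared `vendor_id` key and the one-contract-per-vendor rule are all hypothetical assumptions; in practice the user would first have to discover from the catalogue whether such a key exists at all.

```python
# Hypothetical extracts from two data products.
invoices = [
    {"invoice_no": "1001", "vendor_id": "V01", "amount": 250.0},
    {"invoice_no": "1002", "vendor_id": "V02", "amount": 99.5},
    {"invoice_no": "1004", "vendor_id": "V03", "amount": 40.0},
]
contracts = [
    {"vendor_id": "V01", "contract_id": "C-9", "contract_value": 1000.0},
    {"vendor_id": "V02", "contract_id": "C-7", "contract_value": 50.0},
]


def join_on_vendor(invoice_rows, contract_rows):
    """Left-join invoices to contracts on vendor_id.

    Assumes (hypothetically) at most one contract per vendor; invoices without
    a matching contract keep None in the contract fields.
    """
    by_vendor = {c["vendor_id"]: c for c in contract_rows}
    joined = []
    for inv in invoice_rows:
        c = by_vendor.get(inv["vendor_id"], {})
        joined.append({**inv,
                       "contract_id": c.get("contract_id"),
                       "contract_value": c.get("contract_value")})
    return joined


def spend_by_contract(joined_rows):
    """Descriptive 'what happened' summary: invoiced spend per contract, plus off-contract spend."""
    summary = {}
    for r in joined_rows:
        key = r["contract_id"] or "OFF-CONTRACT"
        summary[key] = summary.get(key, 0.0) + r["amount"]
    return summary


if __name__ == "__main__":
    print(spend_by_contract(join_on_vendor(invoices, contracts)))
    # {'C-9': 250.0, 'C-7': 99.5, 'OFF-CONTRACT': 40.0}
```

Every choice here (which key to join on, left versus inner join, how to treat unmatched rows) sits with the user, and the result still only describes what happened within the scope of the two products.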

Bottom line: It might be worth reflecting on how the responsibility for building queries, reports, and dashboards has shifted toward the business. Is this the most effective setup, or is there an opportunity to rebalance the support between IT and business teams?

Outcome

When you take a step back and look at this linear, phased approach, it quickly becomes clear: it offers no real guarantee of business value at the end. There’s a significant upfront investment — in time, money, and effort — with little to show for it along the way. It’s a high-risk model built on deferred outcomes. And let’s be honest, that’s the opposite of Agile.

So we challenge you to think differently by focusing on what the business truly needs:

- Fewer data processing layers

- Solutions to real use cases or business problems

- Reduced reliance on specialist technical skillsets

- Unrestricted access to data across the organisation

- High-quality data delivered with the right business context

- The ability to answer all analytical questions, not just a limited set of predefined ones

- A way to avoid the super swamp!

Bottom line: Considering natural human behaviour and the growing role of AI, there’s a real risk of drifting into a complex and overwhelming data landscape. But if that’s the direction things are heading, there’s still time to course-correct. We’d be happy to help you explore a clearer, more sustainable path forward. Join us